Prof. Naghmeh Karimi funded to study computing-in-memory AI accelerators

Naghmeh Karimi, an associate professor in the Department of Computer Science and Electrical Engineering, was recently granted more than $300,000 in funding from the Semiconductor Research Corporation (SRC) to study the security of promising hardware components that speed up the computing process.

SRC brings together technology companies, academics, and government agencies to tackle large scientific and technical challenges, and Karimi’s research will be funded by three leading technology companies: IBM-Research, AMD, and Siemens.

Karimi and her team, including graduate students and a collaborator from Arizona State University, will study computer chips whose design and structure allow computing-in-memory (CiM), where data processing happens directly within the computer’s memory. CiM architectures are promising for speeding up machine learning algorithms because they consume less energy.

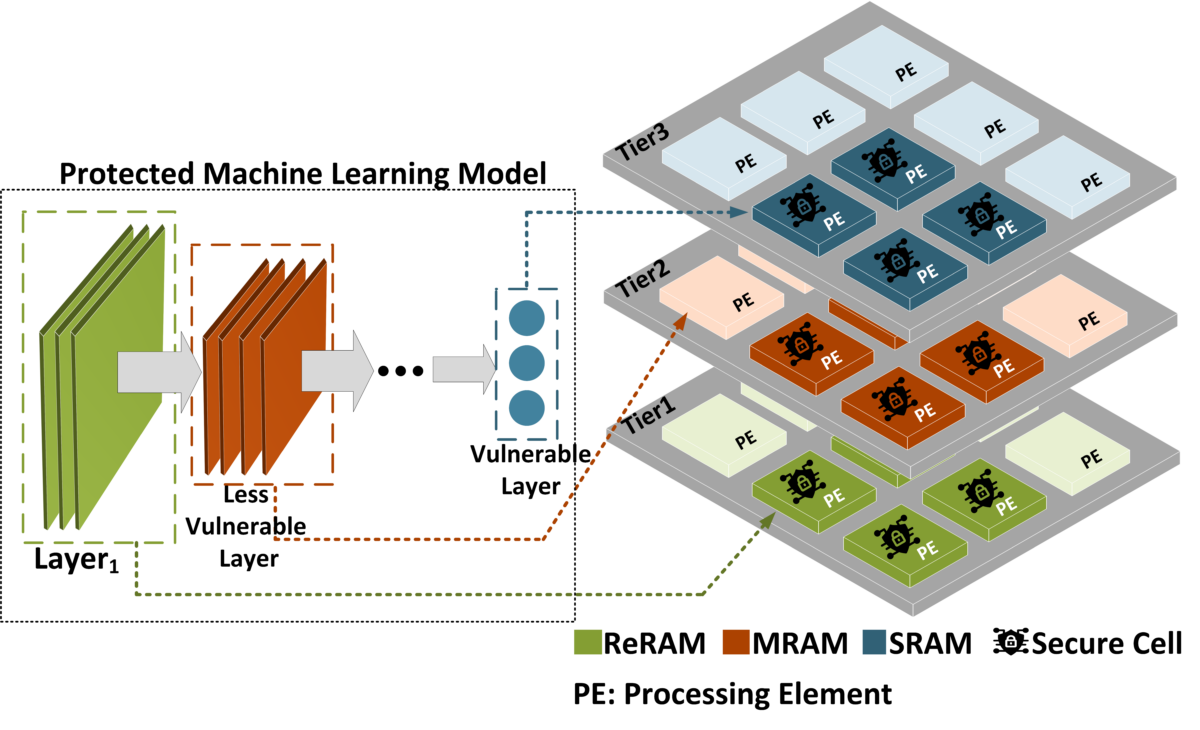

Different types of CiM devices (such as RRAM, MRAM, and SRAM) each have their own strengths and weaknesses in terms of performance, power use, and size. To get the best results, engineers need to combine different CiM devices into one system. Building these systems in 3D layers can further improve their efficiency and performance. However, the security of these 3D architectures has received little attention to date.

Karimi and her team will study the security of computing-in-memory architectures, as shown in this project overview. (Image courtesy of Karimi)

Karimi’s team will study the security of 3D CiM technologies used in AI applications. In particular, the research will focus on evaluating the security vulnerabilities of the technologies and developing mitigation strategies.

“I’m excited about this project because the topic is very timely,” says Karimi. “The support from three leading companies in the AI field shows the importance of the problem and the promise of the solutions we are working on.”

The team aims to enhance the security of CiM-based AI accelerators against physical attacks that adversaries might launch to leak sensitive data or induce malfunctions. The researchers will work closely with the funding companies over the next three years in this area.

This post was written by Catherine Meyers and was originally published online.

Posted: January 21, 2025, 4:27 PM