Prof. Cynthia Matuszek receives prestigious NSF CAREER award

Focus on improving robots' ability to interact with people

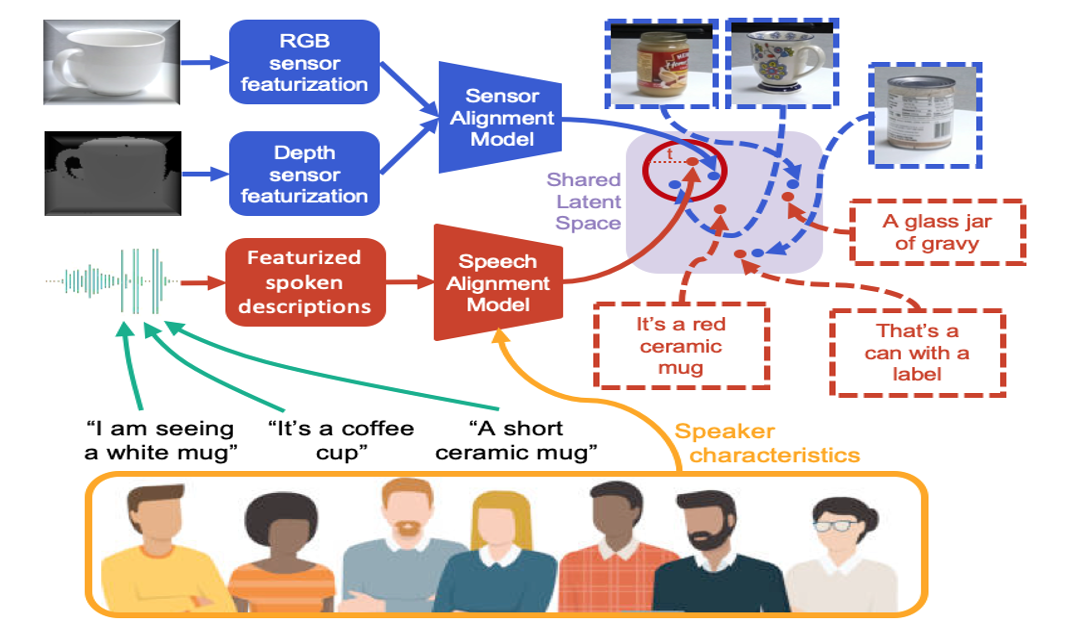

"The goal of this project is to allow robots to learn to understand spoken instructions and information about the world directly from speech with end users. Modern robots are small and capable, but not adaptable enough to perform the variety of tasks people may require. Meanwhile, too many machine learning systems work poorly for people from under-represented groups. The research will use physical, real-world context to enable learning directly from speech, including constructing a data set that is large, realistic, and inclusive of speakers from diverse backgrounds.

"As robots become more capable and ubiquitous, they are increasingly moving into traditionally human-centric environments such as health care, education, and eldercare. As robots engage in tasks as diverse as helping with household work, deploying medication, and tutoring students, it becomes increasingly critical for them to interact naturally with the people around them. Key to this progress is the development of robots that acquire an understanding of goals and objects from natural communications with a diverse set of end-users. One way to address this is using language to build systems that learn from people they are interacting with. Algorithms and systems developed in this project will allow robots to learn about the world around them from linguistic interactions. This research will focus on understanding spoken language about the physical world from diverse groups of people, resulting in systems that are more able to robustly handle a wide variety of real-world interactions. Ultimately, the project will increase the usability and fairness of robots deployed in human spaces.

"This CAREER project will study how robots can learn about noisy, unpredictable human environments from spoken language combined with perception, using context derived from sensors to constrain the learning problem. Grounded language refers to language that occurs in and refers to the physical world in which robots operate. Human interactions are fundamentally contextual: when learning about the world, we focus on learning by considering not only direct communication but also the context of that interaction. This work will focus on learning semantics directly from perceptual inputs combined with speech from diverse sources. The goal is to develop learning infrastructure, algorithms, and approaches to enable robots to learn to understand task instructions and object descriptions from spoken communication with end users. The project will develop new methods of efficiently learning from multi-modal data inputs, with the ultimate goal of enabling robots to efficiently and naturally learn about their world and the tasks they should perform."

Posted: March 16, 2022, 1:20 PM