PhD defense: Z. Wang, Learning Representations and Modeling Temporal Signals

Computer Science PhD Dissertation Defense

Learning Representations and Modeling Temporal Signals:

Symbolic Approximation, Deep Learning, Optimization and Beyond

Zhiguang Wang

1:00pm Tuesday, 31 May 2016, ITE 325, UMBC

Most real-world data has a temporal component, whether it measures natural or man-made phenomena. In particular, complex, high-dimensional, and noisy temporal data are often difficult to model because their intrinsic temporal/topographic structures are highly non-linear, which complicates learning and optimization. This talk will cover three related but self-contained topics that address representation learning for time series, deep learning optimization, and unsupervised feature learning.

First, I will show how to combine ideas from symbolic approximation with simple NLP techniques to represent and model temporal signals. To improve on symbolic approximations that model signals as words, we build a time-delay embedding vector (also known as a skip-gram) to extract dependencies at different time scales, which yields state-of-the-art classification performance with a bag-of-patterns and vector space model. A non-parametric pooling/weighting scheme is proposed to extend the methods to multivariate signals.
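As a rough illustration of the idea of turning a signal into skip-gram "words", the sketch below discretizes a z-normalized series into a four-letter alphabet and counts words whose letters are taken `delay` steps apart. It is a simplified sketch, not the method from the dissertation: the breakpoints are the standard Gaussian quartiles commonly used in SAX, the piecewise aggregation step is omitted, and the names `symbolize`, `skip_gram_bag`, `word_len`, and `delay` are hypothetical.

```python
from collections import Counter

import numpy as np

# Assumption: breakpoints splitting a standard normal into 4 equiprobable
# regions, the usual SAX choice for an alphabet of size 4.
BREAKPOINTS = np.array([-0.6745, 0.0, 0.6745])
ALPHABET = "abcd"


def symbolize(series):
    """Z-normalize a series and map every point to a letter."""
    x = np.asarray(series, dtype=float)
    std = x.std()
    z = (x - x.mean()) / std if std > 0 else np.zeros_like(x)
    idx = np.searchsorted(BREAKPOINTS, z)  # region index 0..3 per point
    return "".join(ALPHABET[i] for i in idx)


def skip_gram_bag(symbols, word_len=3, delay=2):
    """Count words built from letters spaced `delay` steps apart."""
    span = (word_len - 1) * delay  # distance from first to last letter
    bag = Counter()
    for start in range(len(symbols) - span):
        word = symbols[start : start + span + 1 : delay]
        bag[word] += 1
    return bag
```

Varying `delay` gives bags at different time scales; the resulting counts could then feed a vector space model such as tf-idf, which is not shown here.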

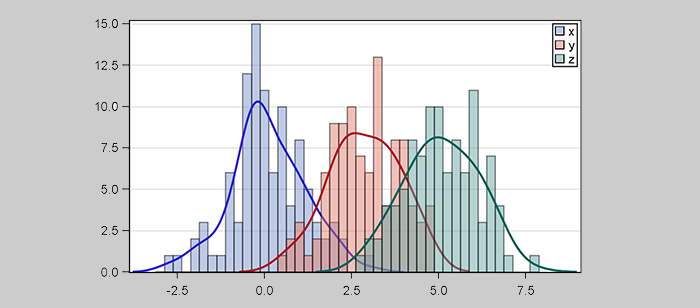

Second, I will show how to encode signals as images so they can be learned and analyzed with deep learning methods. Two novel encodings are proposed, the Gramian Angular Field (GAF) and the Markov Transition Field (MTF), which capture both the multi-scale spatial correlation and the first-order Markov dynamics of temporal signals as images. These visual representations are shown to work well both for visual inspection by humans and for pattern recognition with deep learning approaches. This work yields state-of-the-art algorithms for temporal data classification and imputation.
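To make the two encodings more concrete, here is a minimal NumPy sketch. It assumes the summation form of the GAF (rescale to [-1, 1], take the arccos of each value as an angle, then the cosine of pairwise angle sums) and a quantile-binned first-order transition matrix for the MTF. The function names and the `n_bins` parameter are illustrative choices, and the image-size reduction often applied to long series is left out.

```python
import numpy as np


def gramian_angular_field(series):
    """Summation-form GAF: entry (i, j) is cos(phi_i + phi_j)."""
    x = np.asarray(series, dtype=float)
    lo, hi = x.min(), x.max()
    # Rescale to [-1, 1]; a constant series is mapped to zeros.
    scaled = 2 * (x - lo) / (hi - lo) - 1 if hi > lo else np.zeros_like(x)
    phi = np.arccos(np.clip(scaled, -1.0, 1.0))  # polar angle per point
    return np.cos(phi[:, None] + phi[None, :])


def markov_transition_field(series, n_bins=4):
    """MTF: entry (i, j) is the transition probability bin(x_i) -> bin(x_j)."""
    x = np.asarray(series, dtype=float)
    # Interior quantile edges give roughly equally populated bins.
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1)[1:-1])
    bins = np.searchsorted(edges, x, side="right")
    # Count first-order transitions between consecutive time steps.
    counts = np.zeros((n_bins, n_bins))
    np.add.at(counts, (bins[:-1], bins[1:]), 1)
    row_sums = counts.sum(axis=1, keepdims=True)
    trans = np.divide(counts, row_sums, out=np.zeros_like(counts),
                      where=row_sums > 0)
    # Spread the transition probabilities along both time axes.
    return trans[bins[:, None], bins[None, :]]
```

Both functions return an n-by-n array for a series of length n, so the output can be treated as a single-channel image.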

Finally, deep learning for image recognition (e.g., pictures or GAF/MTF images) involves high-dimensional non-convex optimization, which is generally intractable. However, I show how to use a set of exponential-form error estimators (NRAE/NAAE) and learning approaches (Adaptive Training) to attack the non-convex optimization problems that arise in training deep neural networks. Both in theory and in practice, they are able to achieve optimality on accuracy and robustness against outliers/noise. They offer another perspective on the non-convex optimization problem (especially saddle points) in deep learning.
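The announcement does not spell out the estimators, so the following is only a sketch under a stated assumption: that the normalized risk-averting error (NRAE) takes the log-mean-exp form (1/λ) log((1/m) Σ exp(λ e_i)), where e_i is the squared error of sample i. The function name `nrae` and the parameter `lam` are hypothetical, and the adaptive training procedure is not shown.

```python
import numpy as np


def nrae(pred, target, lam=1.0):
    """Assumed NRAE form: (1/lam) * log(mean(exp(lam * squared_error)))."""
    pred = np.atleast_1d(np.asarray(pred, dtype=float))
    target = np.atleast_1d(np.asarray(target, dtype=float))
    # Squared error per sample, summed over any output dimensions.
    err = ((pred - target) ** 2).reshape(len(pred), -1).sum(axis=1)
    scaled = lam * err
    # Subtract the largest exponent first so exp() cannot overflow.
    peak = scaled.max()
    return (peak + np.log(np.mean(np.exp(scaled - peak)))) / lam
```

Under this form the value lies between the mean and the maximum per-sample squared error: a small `lam` approaches the ordinary mean squared error, while a large `lam` weights the worst-fit samples more heavily.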

Committee: Tim Oates (Chair), Matt Schmill (Miner & Kasch), Hamed Pirsiavash, Yun Peng, Kostas Kalpakis

Posted: May 30, 2016, 9:55 PM