talk: Talking to Robots, 1pm Mon 4/7 ITE325b

Talking to Robots: Learning to Ground Human Language in Robotic Perception

Cynthia Matuszek

University of Washington

1:00pm Monday, 7 April 2014, ITE325b, UMBC

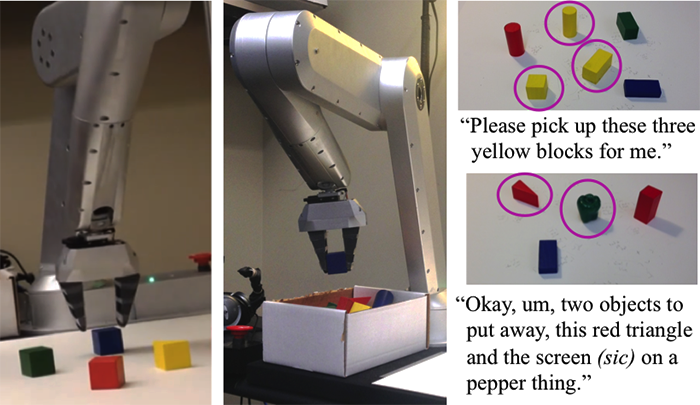

Advances in computation, sensing, and hardware are enabling robots to perform an increasing variety of tasks in ever less constrained settings. It is now possible to imagine robots that can operate in traditionally human-centric settings. However, such robots need the flexibility to take instructions and learn about tasks from nonspecialists using language and other natural modalities. At the same time, learning to process natural language about the physical world is difficult without a robot’s sensors and actuators. Combining these areas to create useful robotic systems is a fundamentally multidisciplinary problem, requiring advances in natural language processing, machine learning, robotics, and human-robot interaction. In this talk, I describe my work on learning natural language from end users in a physical context; such language allows a person to communicate their needs in a natural, unscripted way. I demonstrate that this approach can enable a robot to follow directions, learn about novel objects in the world, and perform simple tasks such as navigating an unfamiliar map or putting away objects.

Cynthia Matuszek is a Ph.D. candidate in the University of Washington Computer Science and Engineering department, where she is a member of both the Robotics and State Estimation lab and the Language, Interaction, and Learning group. She earned a B.S. in Computer Science from the University of Texas at Austin and an M.Sc. from the University of Washington. She has published in the areas of artificial intelligence, robotics, ubiquitous computing, and human-robot interaction.

Posted: April 5, 2014, 3:23 PM