MS defense, Budhraja: Neuroevolution-Based Inverse Reinforcement Learning

M.S. Thesis Defense

Neuroevolution-Based Inverse Reinforcement Learning

Karan K. Budhraja

9:00am Wednesday, 2 December 2015, ITE 346

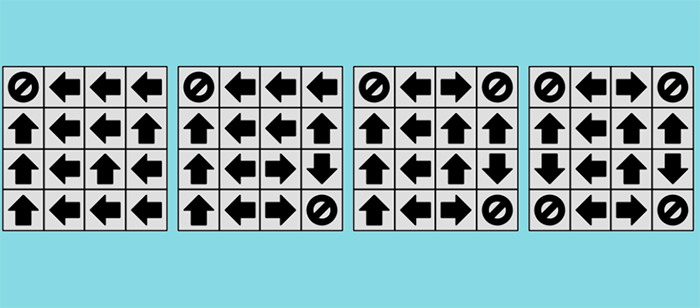

Motivated by learning from observation in nature, Learning from Demonstration addresses the problem of learning to perform tasks from observed examples. One approach to Learning from Demonstration is Inverse Reinforcement Learning, in which rewards are inferred from observed actions. This work combines a feature-based state evaluation approach to Inverse Reinforcement Learning with neuroevolution, a paradigm for modifying neural networks based on their performance on a given task. Neural networks are used to learn from a demonstrated expert policy and are evolved to generate a policy similar to the demonstration. The algorithm is discussed and evaluated against competitive feature-based Inverse Reinforcement Learning approaches. At the cost of execution time, neural networks allow for non-linear combinations of features in state evaluations. These evaluations may correspond to state value or state reward. This results in better correspondence to observed examples than linear combinations provide.
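The general idea described above can be illustrated with a minimal sketch. It is not the algorithm from the thesis: it assumes a small deterministic grid world, a stand-in expert that walks toward the nearest of two reward cells, a fixed-topology network with one hidden layer, and a simple elitist mutation-only evolutionary loop. All names (`GOALS`, `expert_action`, `evaluate`, `evolve`) and all parameter values are hypothetical choices made for illustration. Fitness is the fraction of demonstrated states in which the greedy policy induced by the network's state evaluations picks the same action as the expert.

```python
import math
import random

SIZE = 5                                   # assumed 5x5 grid world
GOALS = [(0, SIZE - 1), (SIZE - 1, 0)]     # assumed: two sparse reward cells
ACTIONS = [(0, 1), (0, -1), (1, 0), (-1, 0)]
HIDDEN = 4                                 # hidden units in the evaluator network
N_WEIGHTS = 3 * HIDDEN + HIDDEN + 1        # hidden (2 weights + bias) + output weights + output bias


def step(state, action):
    """Deterministic move, clipped to the grid borders."""
    x = min(max(state[0] + action[0], 0), SIZE - 1)
    y = min(max(state[1] + action[1], 0), SIZE - 1)
    return (x, y)


def goal_distance(state):
    """Manhattan distance to the nearest goal cell."""
    return min(abs(state[0] - gx) + abs(state[1] - gy) for gx, gy in GOALS)


def expert_action(state):
    """Stand-in expert: index of the action leading closest to a goal."""
    return min(range(len(ACTIONS)),
               key=lambda a: goal_distance(step(state, ACTIONS[a])))


def features(state):
    """State features: normalized grid coordinates."""
    return [state[0] / (SIZE - 1), state[1] / (SIZE - 1)]


def evaluate(weights, state):
    """Non-linear state evaluation: one tanh hidden layer, scalar output."""
    f = features(state)
    out = weights[-1]
    for h in range(HIDDEN):
        w0, w1, b = weights[3 * h: 3 * h + 3]
        out += weights[3 * HIDDEN + h] * math.tanh(w0 * f[0] + w1 * f[1] + b)
    return out


def policy_action(weights, state):
    """Greedy policy: move to the successor state with the highest evaluation."""
    return max(range(len(ACTIONS)),
               key=lambda a: evaluate(weights, step(state, ACTIONS[a])))


# Demonstration: expert actions in every non-goal state.
DEMO = [((x, y), expert_action((x, y)))
        for x in range(SIZE) for y in range(SIZE) if (x, y) not in GOALS]


def fitness(weights):
    """Fraction of demonstrated states where the policy matches the expert."""
    return sum(policy_action(weights, s) == a for s, a in DEMO) / len(DEMO)


def evolve(generations=60, pop_size=30, sigma=0.3, seed=0):
    """Elitist, mutation-only evolution of the network weights."""
    rng = random.Random(seed)
    population = [[rng.gauss(0, 1) for _ in range(N_WEIGHTS)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        elite = population[: pop_size // 5]          # survivors, kept unchanged
        children = [[w + rng.gauss(0, sigma) for w in rng.choice(elite)]
                    for _ in range(pop_size - len(elite))]
        population = elite + children
    best = max(population, key=fitness)
    return best, history + [fitness(best)]


if __name__ == "__main__":
    best, history = evolve()
    print("agreement with expert, first vs. last generation:",
          history[0], history[-1])
```

Because the elite is carried over unchanged, the best agreement score never decreases across generations. The demonstrated behaviour depends on the minimum of two distances, which is not a linear function of the coordinate features, so this toy setting is one where a non-linear evaluator has room to help. The thesis itself targets non-deterministic Markov Decision Processes, which this deterministic sketch does not model.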

This work also extends existing work on Bayesian Non-Parametric Feature Construction for Inverse Reinforcement Learning by using non-linear combinations of intermediate data to improve performance. The algorithm is observed to be particularly suitable for linearly solvable, non-deterministic Markov Decision Processes in which multiple rewards are sparsely scattered in the state space. Performance of the algorithm is shown to be limited by the parameters used, implying adjustable capability. A conclusive performance hierarchy between evaluated algorithms is constructed.

Committee: Drs. Tim Oates, Cynthia Matuszek and Tim Finin

Posted: December 1, 2015, 11:06 PM